While large hyperscale and cloud data centers are now adopting 400 Gigabit applications, and large enterprise data centers are just beginning to evaluate their options to migrate to 400 Gig over the next few years, these next-generation applications and the upcoming 100 Gb/s per lane PAM4 signaling technology have set the stage for future 800 Gig and 1.6 and 3.2 Terabit applications.

While it may be hard to fathom needing 800 Gig data center speeds, recent predictions are that data center traffic will grow to more than 181 Zettabytes over the next four years. Large hyperscale and cloud data centers will therefore need 800 Gig and eventually 1.6 and 3.2 TBit to transmit massive amounts of data between switch tiers, such as between leaf and spine switches, or in data center interconnects that connect multiple data centers to share data, support load balancing, and/or provide redundancy.

Let’s take a look at how the first iterations of 800 Gig based on current 400 Gig logic might shape up, what the future holds for TBit applications, and the key challenges that need to be overcome.

A Foundation to Build On

We can’t discuss what 800 Gig applications will initially look like without considering 400 Gig applications that create the foundation. Based on 100 Gb/s per lane PAM4 signaling, the following table shows how 400 Gig data center applications can easily transition to 800 Gig.

| 400G Applications | # of Lanes | Connector Interface | 800G Applications | # of Lanes | Connector Interface |
|---|---|---|---|---|---|
| MULTIMODE | | | | | |
| 400GBASE-SR4 | 4 | MPO-8/MPO-12 | 800GBASE-SR8 | 8 | MPO-16/MPO-24 |
| 400GBASE-VR4 | 4 | MPO-8/MPO-12 | 800GBASE-VR8 | 8 | MPO-16/MPO-24 |
| SINGLEMODE | | | | | |
| 400GBASE-DR4 | 4 | MPO-8/MPO-12 | 800GBASE-DR8 | 8 | MPO-16/MPO-24 |
| 400GBASE-FR4 | 4 | Duplex | 800GBASE-FR8 | 8 | Duplex |

To support 800 Gig applications, specifications for 8-lane QSFP-DD and OSFP pluggable transceiver modules developed for 400 Gigabit at 50 Gb/s per lane signaling are being updated to 100 Gb/s per lane signaling. That means upcoming QSFP-DD800 and OSFP 800G pluggable optical modules will maintain backwards compatibility with existing QSFP and OSFP form factors for maximum flexibility.

While the IEEE has not yet defined a MAC data rate for 800 Gb/s, the IEEE Beyond 400 Gb/s Ethernet Study Group has already developed objectives for 800 Gig at 100 Gb/s per lane signaling based on 400 Gig logic. These objectives include:

- Over 8 pairs of multimode fiber to at least 100 m

- Over 8 pairs of singlemode to at least 500 m

- Over 4 pairs of singlemode to at least 500 m

- Over 4 pairs of singlemode to at least 2 km

- Over 4 wavelengths on a single singlemode fiber in each direction to at least 2 km

While 800 Gig is expected to drive the need for MPO-16 connectivity, the first deployments will likely be breakout applications, such as 8X100 Gig, 4X200 Gig, and 2X400 Gig using small form-factor connectivity such as SN and MDC connectors as described in our previous blog for 400 Gig breakouts.
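The breakout options mentioned above follow from splitting the eight 100 Gb/s lanes of an 800 Gig port into equal groups. A minimal Python sketch of that arithmetic is shown here; the function name `breakout_options` and its parameters are hypothetical, chosen only for illustration, and the sketch ignores whether a given transceiver actually supports each split.

```python
def breakout_options(total_lanes: int = 8, lane_rate_gbps: int = 100):
    """List even breakouts of one port as (number of links, Gb/s per link).

    Assumption: every breakout link uses the same number of lanes, and the
    full-port case (all lanes in one link) is not counted as a breakout.
    """
    options = []
    for lanes_per_link in range(1, total_lanes):
        if total_lanes % lanes_per_link == 0:
            links = total_lanes // lanes_per_link
            options.append((links, lanes_per_link * lane_rate_gbps))
    return options


for links, rate in breakout_options():
    print(f"{links}x{rate} Gig")
```

With the default values this lists the 8x100, 4x200, and 2x400 Gig cases named in the text.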

Terabit Speeds Bring New Challenges

While 800 Gig is essentially achievable using 400 Gig logic, hyperscale data center providers like Google, Facebook, Microsoft, and others are already hard at work within Multi-Source Agreement (MSA) groups to develop innovative optical technologies to support 1.6 and 3.2 TBit.

The OSFP MSA is working on a 16-lane OSFP-XD pluggable transceiver module to support 1.6 TBit at a 100 Gb/s lane rate. While this could potentially be achieved over 32 fibers for parallel optics using two MPO-16 connectors or one MPO-32 connector, this configuration would result in very high-density connectivity and a larger amount of fiber that requires more rack space. It could also potentially be achieved using WDM technology, where multiple wavelengths transmit at 100 Gb/s on a single singlemode fiber in each direction, creating the potential for supporting 1.6 TBit over fewer pairs of singlemode fiber.
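To make the fiber-count trade-off concrete, here is a rough Python sketch of the arithmetic. It assumes one transmit and one receive fiber per fiber pair and that every wavelength carries one lane; the function `fibers_required` and the 4-wavelength WDM case are illustrative assumptions, not figures from any MSA specification.

```python
import math


def fibers_required(lanes: int, wavelengths_per_fiber: int = 1) -> int:
    """Total fibers needed when each fiber carries N lanes in one direction.

    wavelengths_per_fiber=1 models parallel optics (one lane per fiber);
    larger values model WDM, where several lanes share one fiber.
    """
    pairs = math.ceil(lanes / wavelengths_per_fiber)
    return 2 * pairs  # one fiber for transmit, one for receive


print(fibers_required(16))     # parallel optics, 16 lanes at 100 Gb/s
print(fibers_required(16, 4))  # hypothetical WDM with 4 wavelengths per fiber
```

The parallel-optics case gives the 32 fibers cited above, while the hypothetical 4-wavelength case drops to 8 fibers, or 4 pairs.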

Achieving 1.6 TBit using pluggable modules, however, creates significant power consumption and cost challenges due to the electrical channel between the module and the chip (i.e., application-specific integrated circuit) of the switch. Another potential technology for 1.6 TBit is co-packaged optics (CPO), which consists of a switch assembly with multiple optical interfaces at the chip. By bringing the optics closer to the chip, power consumption can be significantly reduced. While the reduced power consumption provides a strong incentive to use CPO technology, it is a significant transition away from the industry’s preferred pluggable transceiver technology, which facilitates interoperability and eases scalability since modules are accessible from the front of the switch panel.

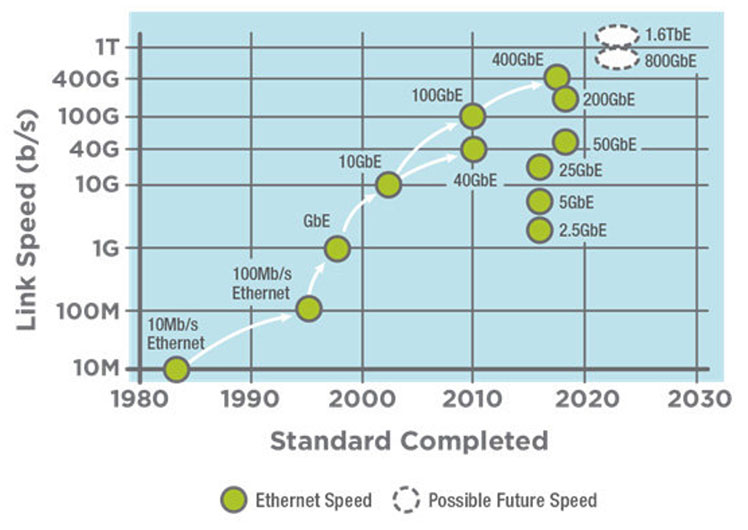

At this point, it’s anyone’s guess how TBit applications and deployments will shape up, and it’s likely another 3 to 5 years before we see whether pluggable transceivers or CPO win out. Either option will, however, require much higher densities. In the meantime, ongoing advancements could change the outcome. For example, the development of 200 Gb/s per lane signaling would have a massive impact, although it is difficult to achieve from a signal-to-noise ratio perspective and will therefore require shorter link lengths. At a 200 Gb/s lane rate, the number of lanes required for 800 Gig could be cut in half, bringing us back to just four lanes and MPO-8/MPO-12 connectivity. That also means 1.6 TBit could be achieved over just 8 lanes, and 3.2 TBit over 16 lanes. The graph below from the Ethernet Alliance 2020 roadmap shows that 3.2 TBit is not too far out!
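The lane counts quoted above come from dividing the aggregate speed by the per-lane rate. A short Python sketch of that calculation follows; the helper name `lanes_needed` is hypothetical, and the sketch ignores encoding and FEC overhead.

```python
def lanes_needed(aggregate_gbps: int, lane_rate_gbps: int) -> int:
    """Number of lanes needed to carry an aggregate speed at a given lane rate."""
    lanes, remainder = divmod(aggregate_gbps, lane_rate_gbps)
    if remainder:
        raise ValueError("aggregate speed is not a whole multiple of the lane rate")
    return lanes


# Compare 100 Gb/s and 200 Gb/s lane rates for each speed discussed in the text.
for speed in (800, 1600, 3200):
    at_100 = lanes_needed(speed, 100)
    at_200 = lanes_needed(speed, 200)
    print(f"{speed} Gig: {at_100} lanes at 100 Gb/s, {at_200} lanes at 200 Gb/s")
```

Doubling the lane rate halves the lane count at every speed, which is why 200 Gb/s signaling would ease the connector density problem.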

The good news is that you can trust CablesPlus USA to continue monitoring 800 Gig and future TBit developments. And our ability to deliver the highest density in a 1U with our HD8² ultra-high-density patch panel, with support for small form-factor SN and MDC connectivity as well as MPO-16 connectivity, means that we will be ready and able to support these applications as they come to fruition. If you'd like to talk through your specific application or need guidance, we can do that too! Just contact us at sales@cablesplususa.com or 866-678-5852.