Top 5 Infrastructure Considerations for a Successful Data Center Upgrade

- Oct 30th 2025

The demands on your data center have never been greater, from supporting modern AI-driven workloads to delivering faster speeds and reducing soaring energy costs. But embarking on a data center upgrade without meticulous infrastructure assessment and preparation is the quickest path to failure. Insufficient planning and overlooked infrastructure considerations can lead to common pitfalls that delay your go-live date, compromise uptime, limit efficiency, and sabotage your long-term scalability, ultimately costing you more.

To help you smoothly navigate the high-stakes process of upgrading your data center, we’ve compiled the top 5 critical infrastructure questions to ask during the planning phase.

1. How Much Power and Cooling Capacity is Needed?

Whenever you upgrade or add new IT equipment (like servers and switches) to handle higher workloads, reduce latency, improve virtualization, or all of the above, it’s essential to understand the impact on power and cooling and to make sure your data center can support the total IT load.

Every new piece of equipment should have a nameplate specifying its maximum power consumption in watts. If only amps are listed, multiply amps by voltage; the result is technically volt-amperes (VA), which equals or exceeds true watts, so it serves as a conservative estimate. This figure is essential for determining rack power, which in turn dictates the rating of your uninterruptible power supplies (UPS). If a rack lacks sufficient power to support new equipment, you may need to upgrade your rack power feed, find a different location with available power, or free up power by removing or relocating non-essential equipment.
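The nameplate arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming each device's nameplate gives either watts or amps plus a supply voltage; the `Device` class, the helper names, the device ratings, and the 5,000 W rack budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    """A piece of IT equipment described by its nameplate rating (hypothetical model)."""
    name: str
    watts: Optional[float] = None  # nameplate maximum power, if listed
    amps: Optional[float] = None   # nameplate current, if only amps are listed
    volts: Optional[float] = None  # supply voltage paired with the amps rating

    def max_watts(self) -> float:
        if self.watts is not None:
            return self.watts
        if self.amps is not None and self.volts is not None:
            # amps x volts is volt-amperes (VA); VA >= W, so this errs high
            return self.amps * self.volts
        raise ValueError(f"{self.name}: nameplate needs watts, or amps and volts")


def rack_load_watts(devices: list[Device]) -> float:
    """Total worst-case draw of everything planned for the rack."""
    return sum(d.max_watts() for d in devices)


def rack_has_capacity(devices: list[Device], rack_budget_watts: float) -> bool:
    """True if the planned equipment fits within the rack's power budget."""
    return rack_load_watts(devices) <= rack_budget_watts


# Hypothetical rack: one switch rated in watts, one server rated only in amps
rack = [Device("switch", watts=650), Device("server", amps=4.0, volts=208)]
print(rack_load_watts(rack))          # 1482.0
print(rack_has_capacity(rack, 5000))  # True
```

If `rack_has_capacity` returns `False`, that is the point at which the options listed above (a bigger feed, a different location, or removing equipment) come into play.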

Within each rack, primary and secondary power distribution units (PDUs) must deliver the required voltage and amperage and provide the correct number and type of receptacles for the equipment. Your PDUs should also provide ample power and receptacles for future growth. If your equipment upgrades dictate new PDUs, consider intelligent PDUs, such as metered PDUs that provide real-time load status, monitored PDUs that enable remote power and environmental monitoring, or switched PDUs that offer both remote monitoring and individual outlet control.
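One way to sanity-check a single PDU against the plan is sketched below. It assumes a single-phase PDU whose capacity is volts times amps at a power factor near 1, and it holds back a share of capacity and outlets for future growth; the 20% reserve, the `check_pdu` helper, and the ratings are hypothetical, and the C13/C19 labels (IEC 60320 receptacle types) are used only as example keys. It does not model three-phase PDUs or any electrical-code derating.

```python
from collections import Counter


def check_pdu(volts: float, amps: float, outlets: dict[str, int],
              loads: list[tuple[float, str]], growth_reserve: float = 0.2) -> list[str]:
    """Return a list of problems; an empty list means the PDU fits the plan.

    outlets: receptacle type -> count available on the PDU
    loads: (watts, receptacle type) for each device plugged into this PDU
    growth_reserve: share of capacity and outlets held back for future growth
    """
    problems = []
    capacity_w = volts * amps  # single-phase rating, power factor ~1 assumed
    usable_w = capacity_w * (1 - growth_reserve)
    total_w = sum(watts for watts, _ in loads)
    if total_w > usable_w:
        problems.append(f"load {total_w:.0f} W exceeds {usable_w:.0f} W usable")

    # Count how many receptacles of each type the equipment needs
    needed = Counter(kind for _, kind in loads)
    for kind, count in needed.items():
        usable_outlets = int(outlets.get(kind, 0) * (1 - growth_reserve))
        if count > usable_outlets:
            problems.append(f"needs {count} x {kind}, only {usable_outlets} usable")
    return problems


# Hypothetical 208 V / 30 A PDU with 24 C13 and 6 C19 outlets
plan = [(650, "C13"), (832, "C13"), (1600, "C19")]
print(check_pdu(208, 30, {"C13": 24, "C19": 6}, plan))  # []
```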

To ensure data center reliability, you must also consider cooling requirements. Most equipment will have a BTU/hour value on its nameplate, which measures the rate at which it produces heat. If the value is not provided, you can easily calculate it: 1 watt equals 3.412141633 BTU/hour. To check whether your cooling systems are appropriately sized, convert the total BTU/hour into tons of cooling by dividing by 12,000 (one ton of cooling removes 12,000 BTU/hour). Even if your data center has the cooling capacity to handle total IT heat loads, equipment that generates intense heat, such as the GPUs used in AI applications, may require more advanced cooling technologies like rear-door heat exchangers or direct-to-chip liquid cooling to avoid hot spots that can damage equipment.
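The two conversions above can be written as a short sketch. It uses only the factors given in the text (3.412141633 BTU/hour per watt and 12,000 BTU/hour per ton); the helper names and the 10 kW room are hypothetical.

```python
from typing import Optional

WATTS_TO_BTU_PER_HR = 3.412141633  # conversion factor from the text
BTU_PER_HR_PER_TON = 12_000        # one ton of cooling


def device_heat_btu_per_hr(watts: float, nameplate_btu_per_hr: Optional[float] = None) -> float:
    """Prefer the nameplate BTU/hour value; otherwise convert from watts."""
    if nameplate_btu_per_hr is not None:
        return nameplate_btu_per_hr
    return watts * WATTS_TO_BTU_PER_HR


def cooling_tons(total_btu_per_hr: float) -> float:
    """Convert a total heat load into tons of cooling."""
    return total_btu_per_hr / BTU_PER_HR_PER_TON


# Hypothetical room: 10 kW of IT load with no BTU/hour values on the nameplates
total_btu = device_heat_btu_per_hr(10_000)
print(f"{total_btu:,.0f} BTU/hour")           # 34,121 BTU/hour
print(f"{cooling_tons(total_btu):.2f} tons")  # 2.84 tons
```

A room-level total like this says nothing about hot spots, which is why dense GPU racks may still need the rear-door or direct-to-chip options mentioned above.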

2. What is the Loss Budget for the Application?

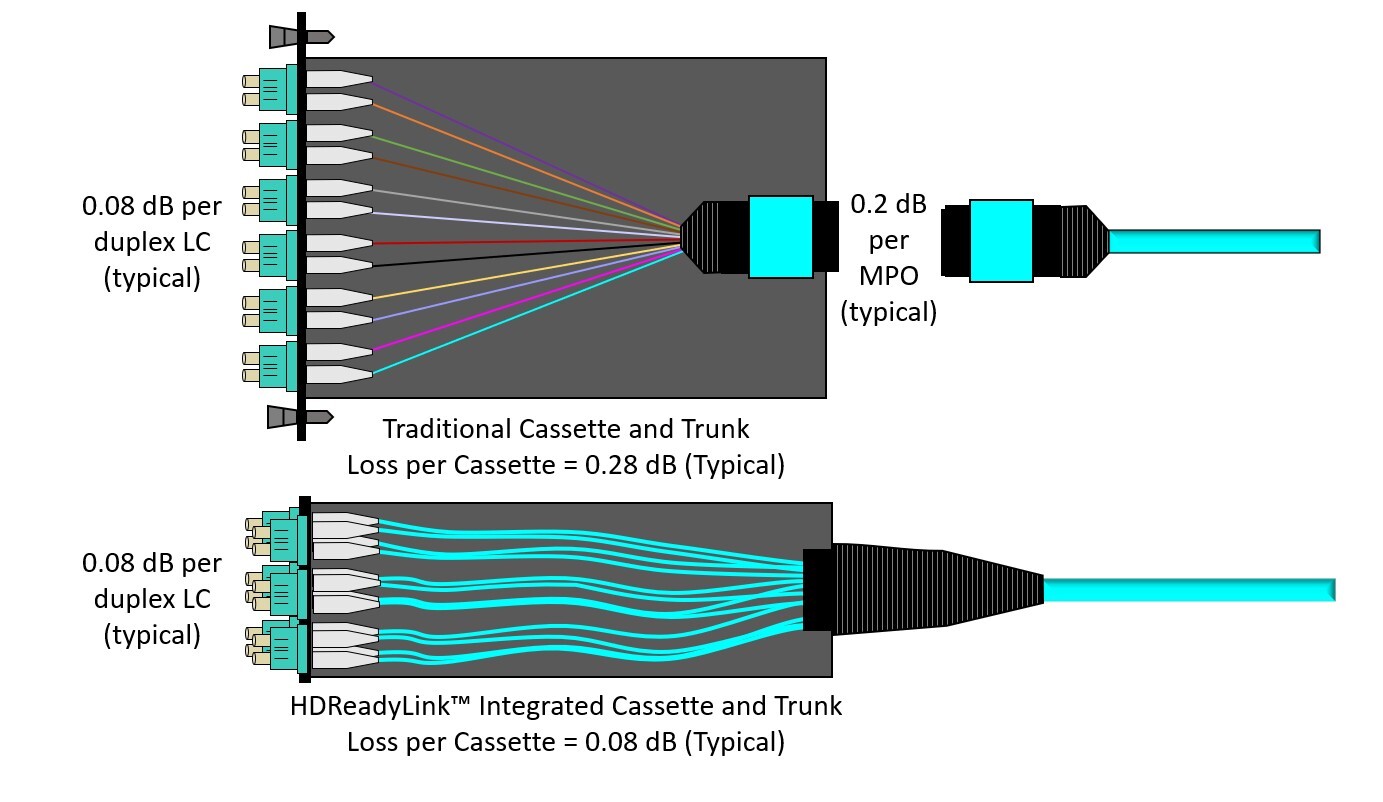

When upgrading switches, servers, or both, you need to know the maximum channel insertion loss for each fiber application you plan to run. To ensure your fiber links can support the application, you need to calculate your loss budget based on the fiber cable's length and loss, as well as the loss of every planned connection point (connectors, splices, etc.) within the channel.

When determining your loss budget, be sure to add headroom to account for real-world installation variables, future reconfigurations, and transceiver degradation. If your planned design exceeds the application’s maximum insertion loss, you may need to reduce the link length or eliminate some connection points. Choosing lower-loss fiber components like our pre-terminated HD8² HDSwitchReady Cassettes that integrate cables and eliminate rear MPO connections can help increase headroom and allow for more connection points.
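A loss budget is the fiber attenuation over the link length plus the loss of every connection point, compared against the application's maximum insertion loss. The sketch below assumes 3.0 dB/km of fiber attenuation, 0.35 dB per MPO connection, and a 0.5 dB headroom target; all three are illustrative values, not figures from this article, so substitute the numbers from your own component data sheets. The 1.8 dB limit is the value this article lists for 400GBASE-SR4 over OM4.

```python
def channel_loss_db(length_m: float, fiber_db_per_km: float,
                    connection_losses_db: list[float]) -> float:
    """Fiber attenuation over the link length plus every connector/splice in the channel."""
    return length_m / 1000 * fiber_db_per_km + sum(connection_losses_db)


def check_budget(max_insertion_loss_db: float, planned_loss_db: float,
                 required_headroom_db: float) -> tuple[float, bool]:
    """Return (headroom, ok), where ok means the headroom meets the target."""
    headroom = max_insertion_loss_db - planned_loss_db
    return headroom, headroom >= required_headroom_db


# Hypothetical 60 m link with two MPO connections (assumed 0.35 dB each)
loss = channel_loss_db(60, fiber_db_per_km=3.0, connection_losses_db=[0.35, 0.35])
headroom, ok = check_budget(1.8, loss, required_headroom_db=0.5)
print(f"loss {loss:.2f} dB, headroom {headroom:.2f} dB, ok={ok}")
# loss 0.88 dB, headroom 0.92 dB, ok=True
```

Adding a connection point to `connection_losses_db` or lengthening the link shows directly how much headroom each design choice consumes.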

3. Are Both Current and Future Bandwidth Needs Accounted For?

It is prudent to consider potential future bandwidth needs when planning an upgrade. Designing your fiber links to support future speeds enables your fiber infrastructure to support multiple generations of equipment, significantly reducing future expenses.

Accounting for future bandwidth needs can impact your link lengths, loss budgets, and the type of components you choose. For example, consider the following loss and length differences between 8-fiber 100GBASE-SR4 and 400GBASE-SR4 applications. Even if you’re only deploying 100GBASE-SR4 today, designing for the 400GBASE-SR4 application with stricter loss/length limits would be wise to avoid expensive infrastructure replacements or reconfigurations down the line.

| | 100GBASE-SR4, OM3 | 100GBASE-SR4, OM4 | 400GBASE-SR4, OM3 | 400GBASE-SR4, OM4 |
|---|---|---|---|---|
| Maximum Channel Length | 70 meters | 100 meters | 60 meters | 100 meters |
| Maximum Insertion Loss | 1.8 dB | 1.9 dB | 1.7 dB | 1.8 dB |
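Designing for the stricter application amounts to taking the minimum length and loss across every application the link must eventually carry. The sketch below copies the limits from the table; the helper names and the planned-link figures in the usage lines are hypothetical.

```python
# (application, fiber type) -> (max channel length in m, max insertion loss in dB),
# copied from the table above
LIMITS = {
    ("100GBASE-SR4", "OM3"): (70, 1.8),
    ("100GBASE-SR4", "OM4"): (100, 1.9),
    ("400GBASE-SR4", "OM3"): (60, 1.7),
    ("400GBASE-SR4", "OM4"): (100, 1.8),
}


def supported_apps(fiber: str, length_m: float, loss_db: float) -> list[str]:
    """Applications from the table that a planned link would support."""
    return [app for (app, f), (max_len, max_loss) in LIMITS.items()
            if f == fiber and length_m <= max_len and loss_db <= max_loss]


def design_limits(fiber: str, apps: list[str]) -> tuple[float, float]:
    """Strictest length and loss across every application the link must carry."""
    rows = [LIMITS[(app, fiber)] for app in apps]
    return min(length for length, _ in rows), min(loss for _, loss in rows)


# A 65 m OM3 link with 1.6 dB of loss works today but blocks the 400G upgrade
print(supported_apps("OM3", 65, 1.6))  # ['100GBASE-SR4']
print(design_limits("OM3", ["100GBASE-SR4", "400GBASE-SR4"]))  # (60, 1.7)
```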

4. What Type and Density of Connectivity is Required?

The application and required port density determine the type of connectivity needed for your fiber infrastructure.

First and foremost, singlemode applications require singlemode cables and connectors, while multimode applications require multimode cables and connectors. You also need to consider the transmission type. Applications using parallel optics that transmit and receive on multiple fibers require multi-fiber MPO/MTP connectivity. In contrast, singlemode wavelength-division multiplexing (WDM) applications that transmit and receive on multiple wavelengths use duplex fiber connectivity.

For parallel optics, the number of fibers depends on the application. SR4 and VR4 multimode applications, as well as DR4 singlemode applications, require 8-fiber MPO/MTP connectivity (4 fibers transmitting and 4 receiving), while SR8, VR8, and DR8 applications require 16-fiber MPO/MTP connectivity (8 fibers transmitting and 8 receiving). You must also consider the fiber end face. While all singlemode MPO/MTP connectors use angled physical contact (APC) end faces, multimode MPO/MTP connectors can use either ultra physical contact (UPC) or APC end faces. However, higher-speed 400 Gig and 800 Gig multimode applications are more susceptible to reflections and require multimode APC MPO/MTP connectors.
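The fiber-count and end-face rules above fit in a small lookup. This sketch encodes only the variants and end-face guidance named in this section; the `mpo_requirements` helper and its parsing of names like `400GBASE-SR4` are hypothetical. Duplex WDM applications are deliberately left out because they use duplex connectivity rather than MPO/MTP.

```python
# Fibers per MPO/MTP connector for the parallel-optic variants named above
PARALLEL_FIBERS = {"SR4": 8, "VR4": 8, "DR4": 8, "SR8": 16, "VR8": 16, "DR8": 16}


def mpo_requirements(app: str) -> tuple[int, str]:
    """Return (fibers per MPO/MTP connector, end face) for a name like '400GBASE-SR4'."""
    speed, sep, variant = app.partition("GBASE-")
    if not sep or variant not in PARALLEL_FIBERS:
        raise ValueError(f"not a parallel-optic application this sketch knows: {app}")
    fibers = PARALLEL_FIBERS[variant]
    singlemode = variant.startswith("DR")
    # Singlemode MPO/MTP is always APC; multimode needs APC at 400 Gig and above
    if singlemode or int(speed) >= 400:
        end_face = "APC"
    else:
        end_face = "UPC or APC"
    return fibers, end_face


print(mpo_requirements("100GBASE-SR4"))  # (8, 'UPC or APC')
print(mpo_requirements("800GBASE-SR8"))  # (16, 'APC')
```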

If you’re using breakout applications, where a single high-speed switch port breaks out to support multiple lower-speed switches or servers, you must plan for both ends of the link. For example, to support 4 or 8 duplex connections from an 8-fiber or 16-fiber switch port, you’ll need the appropriate MPO/MTP connector at one end and MPO/MTP to duplex cassettes or breakout cable assemblies at the other end. You can also leverage high-speed 16-fiber switch ports to support two 8-fiber connections, such as using an 800 Gig switch port to connect two 400 Gig switches or servers. This scenario requires breakout assemblies with a 16-fiber MPO/MTP connector on one end and two 8-fiber MPO/MTP connectors on the other end.
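Breakout planning comes down to dividing the switch port's fiber count by the fiber count of each downstream link. The sketch below assumes duplex links use 2 fibers and 8-fiber MPO/MTP links use 8; the `plan_breakout` helper and the 64-port figure in the usage lines are hypothetical.

```python
def plan_breakout(port_fibers: int, link_fibers: int, ports: int = 1) -> dict:
    """Count downstream links when each switch port is split into smaller links.

    port_fibers: fibers on the switch-port MPO/MTP connector (8 or 16)
    link_fibers: fibers per downstream link (2 for duplex, 8 for an 8-fiber MPO/MTP)
    """
    if port_fibers % link_fibers:
        raise ValueError("port fibers must divide evenly into downstream links")
    links_per_port = port_fibers // link_fibers
    if link_fibers == 2:
        far_end = "MPO/MTP-to-duplex cassette or breakout cable assembly"
    else:
        far_end = f"{port_fibers}-fiber to {links_per_port} x {link_fibers}-fiber breakout assembly"
    return {"links_per_port": links_per_port,
            "total_links": links_per_port * ports,
            "far_end": far_end}


print(plan_breakout(16, 8))
# {'links_per_port': 2, 'total_links': 2, 'far_end': '16-fiber to 2 x 8-fiber breakout assembly'}
print(plan_breakout(16, 2, ports=64)["total_links"])  # 512
```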

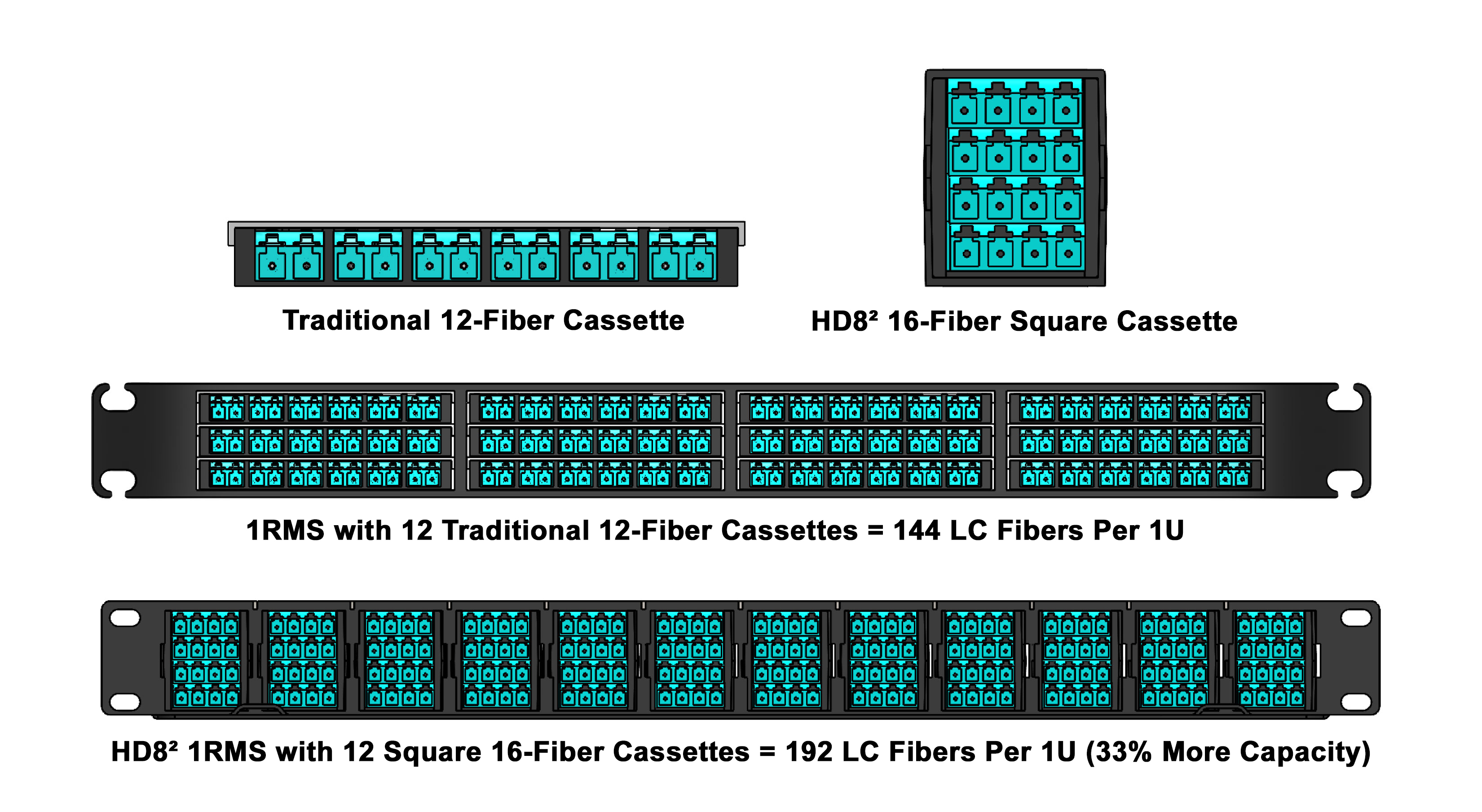

Required density also impacts the type of fiber connectivity. For high-density patching areas, look for patch panels that use a reduced-footprint square cassette design instead of traditional wide, flat cassettes. For instance, our award-winning HD8² High Density Fiber System uses this design to support 96 LC duplex connectors (192 fibers) in just 1U of rack space. This represents a 33% capacity increase over traditional patch panels, which support only 72 LC duplex connectors (144 fibers).
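The 33% figure follows from (96 - 72) / 72, and the fiber counts from two fibers per LC duplex connector. A quick check using only the port counts stated above (the helper name is hypothetical):

```python
def density_gain_pct(new_ports_per_ru: int, old_ports_per_ru: int) -> float:
    """Percentage capacity increase of one panel design over another."""
    return (new_ports_per_ru - old_ports_per_ru) / old_ports_per_ru * 100


FIBERS_PER_LC_DUPLEX = 2

square = 96       # LC duplex ports per 1U, square-cassette panel (from the text)
traditional = 72  # LC duplex ports per 1U, traditional panel (from the text)

print(square * FIBERS_PER_LC_DUPLEX, traditional * FIBERS_PER_LC_DUPLEX)  # 192 144
print(f"{density_gain_pct(square, traditional):.0f}%")                    # 33%
```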

You can also consider very small form factor (VSFF) fiber connectors to increase the number of connections within a given footprint. For duplex connectivity, SN and MDC VSFF connectors are more than 70% smaller than LC duplex connectors. For instance, an 8X100 Gig breakout application supporting 512 duplex 100 Gig connections would require six patch panels using traditional LC but only three patch panels using SNs or MDCs. When you combine these VSFF connectors with a reduced-footprint square cassette design, you get even higher densities. Our HD8² High Density Fiber System supports 16 SN or MDC duplex ports per cassette, equating to 192 duplex ports in a single rack unit.
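The six-versus-three panel comparison can be reproduced with a ceiling division, assuming the panel densities are the 96 LC duplex ports and 192 SN/MDC duplex ports per 1U quoted for the HD8² system. That pairing of figures is an assumption, and `panels_needed` is a hypothetical helper.

```python
import math


def panels_needed(connections: int, ports_per_panel: int) -> int:
    """Whole 1U panels required; a partly used panel still occupies a full rack unit."""
    return math.ceil(connections / ports_per_panel)


connections = 512  # duplex 100 Gig connections in the 8x100 Gig example
print(panels_needed(connections, 96))   # 6  (LC duplex, 96 ports per 1U)
print(panels_needed(connections, 192))  # 3  (SN or MDC, 192 ports per 1U)
```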

For multi-fiber applications, SN-MT and MMC connectors offer nearly three times the density of MPO/MTP connectors. Our HD8² cassettes accommodate 8 ports using traditional MPO/MTP connectors but support 16 ports using SN-MT or MMC connectors (up to 256 fibers per cassette). With a chassis capable of holding 12 HD8² cassettes, the HD8² High Density Fiber System can theoretically support 192 SN-MT or MMC connectors (up to 3,072 fibers) in a single RU. (Check out our previous blog on MTP/MPO vs. MMC connectors for more information.)
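The per-RU totals above are ports per cassette times cassettes per chassis, times fibers per connector. The sketch assumes 16-fiber connectors, which is what the 256-fibers-per-cassette figure implies; the traditional MPO/MTP line also assumes 16-fiber connectors purely for comparison, and `fibers_per_ru` is a hypothetical helper.

```python
def fibers_per_ru(ports_per_cassette: int, fibers_per_connector: int,
                  cassettes_per_ru: int = 12) -> tuple[int, int]:
    """Return (connectors, fibers) in one rack unit of cassettes."""
    connectors = ports_per_cassette * cassettes_per_ru
    return connectors, connectors * fibers_per_connector


print(fibers_per_ru(16, 16))  # (192, 3072)  SN-MT or MMC, 16 ports per cassette
print(fibers_per_ru(8, 16))   # (96, 1536)   traditional MPO/MTP, 8 ports per cassette
```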

5. What is the Impact on Cable Pathways and Routing?

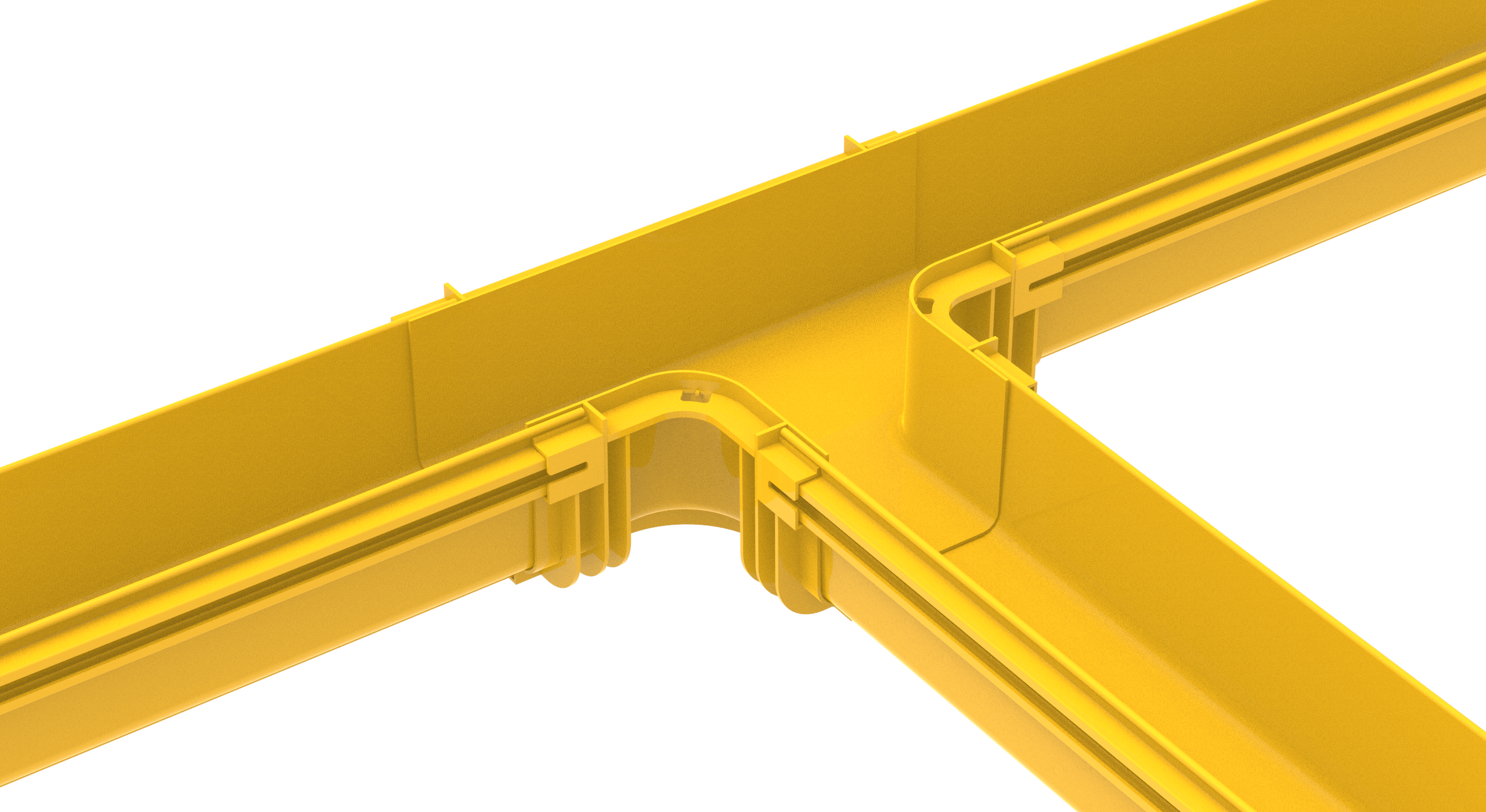

Proper planning for a data center upgrade must also ensure sufficient capacity and protection for fiber cables routed between and within racks and cabinets. Pathways must support the number and weight of cables without overcrowding. Overfilled pathways and cable managers can restrict airflow and complicate maintenance. Pathways should also allow room for future growth while providing easy access for moves, adds, or changes. It's also vital that pathways protect fiber cables by providing separation from copper cables and maintaining the proper bend radius of the cable throughout the entire route, including where cables enter and exit the pathway.
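A rough way to judge overcrowding is to compare the total cross-sectional area of the cables with the pathway's cross-section. The sketch below treats cables as round and the pathway as rectangular; the 3.0 mm cable diameter, the 100 mm x 50 mm duct, and the 50% fill target are hypothetical planning values. The plain area ratio ignores how poorly round cables pack, which is why a target well below 100% is used. It also does not cover cable weight or bend radius, which need their own checks.

```python
import math


def fill_ratio(cable_od_mm: float, cable_count: int,
               width_mm: float, depth_mm: float) -> float:
    """Share of a rectangular pathway's cross-section taken up by round cables."""
    cable_area = math.pi * (cable_od_mm / 2) ** 2
    return cable_area * cable_count / (width_mm * depth_mm)


def max_cables(cable_od_mm: float, width_mm: float, depth_mm: float,
               target_fill: float) -> int:
    """Largest cable count that stays at or under the target fill ratio."""
    cable_area = math.pi * (cable_od_mm / 2) ** 2
    return math.floor(target_fill * width_mm * depth_mm / cable_area)


# Hypothetical: 3.0 mm cables in a 100 mm x 50 mm duct, 50% planning target
print(f"{fill_ratio(3.0, 100, 100, 50):.0%}")  # 14%
print(max_cables(3.0, 100, 50, 0.5))           # 353
```

Comparing today's cable count with `max_cables` gives a rough sense of how much growth room a pathway leaves.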

Cable tray and ducts designed explicitly for fiber are ideal for overhead cable routing in the data center. When selecting fiber cable tray and duct, look for solutions with a variety of fittings to accommodate various routes and transitions, and consider tool-less designs to simplify field installation.

For underfloor pathways, consider using plenum-rated corrugated innerduct to isolate fiber and prevent damage. Look for innerduct with pre-installed pull tape or line and sequential footage markings to facilitate installation. Innerduct available in various colors can also help identify fiber used for different applications and/or networks, such as using distinct colors for backbone switch links, storage area network connections, and server connections. Just as with overhead fiber cable tray and duct, it’s essential to choose an innerduct size that provides space for future growth.

The good news is that Cables Plus is your trusted partner to help you get ahead of potential infrastructure issues when planning your data center upgrade. From power distribution and insertion loss requirements to connectivity and pathways, our team of fiber optic experts is ready to help you deliver data center infrastructure that enables smooth, seamless upgrades while optimizing performance and long-term scalability. Contact us today to speak with a Cables Plus expert.
