Navigating GenAI Connectivity in Backend Networks

- Jul 2nd 2025

Understanding GenAI Connectivity in Backend Networks

Hyperscale, cloud, neocloud, and large enterprise data centers are quickly adopting generative artificial intelligence (GenAI) to transform everything from business operations and customer service to scientific discovery and innovation across a wide range of industries. This isn’t just a trend: Gartner predicts global spending on GenAI will hit $644 billion in 2025, a massive increase of 76.4% from 2024.

Supporting data- and compute-intensive GenAI workloads presents several unique networking requirements compared to traditional data center networks that handle general-purpose workloads. To avoid pitfalls and ensure manageability, scalability, and performance, it’s crucial to understand how GenAI networks function, the various connectivity options, and key considerations.

Where Essential Training Happens

GenAI models, the technology behind powerful tools like ChatGPT, operate in two distinct phases: training and inference. Understanding these phases is key to grasping the critical role of the backend network.

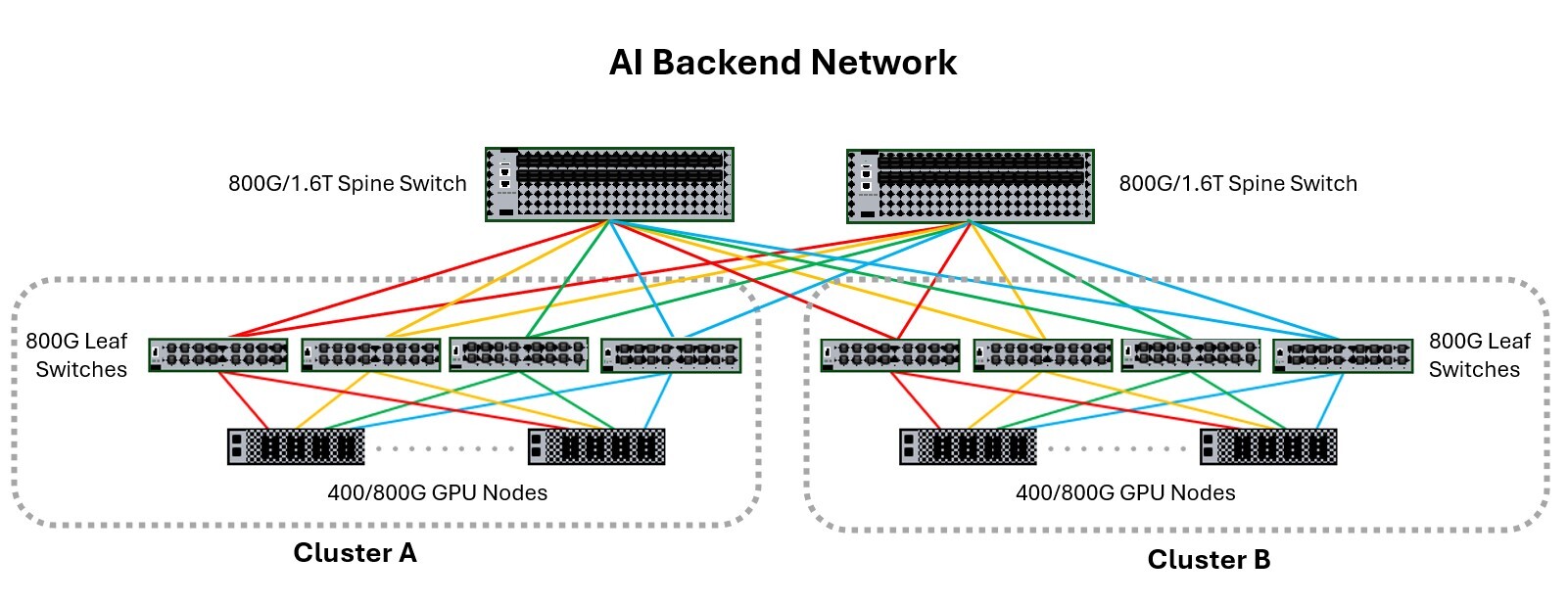

Training is the phase in which the model learns new capabilities by analyzing massive amounts of data. This intensive work takes place in dedicated backend networks designed for high-performance computing. Here, clusters of high-performance GPUs use accelerated parallel processing to ingest and analyze these datasets. The GPUs are housed in “nodes” (typically 4 to 8 GPUs per node), alongside traditional CPUs, memory, and storage. Data centers can host multiple clusters of various sizes, each tailored to specific needs and workloads.

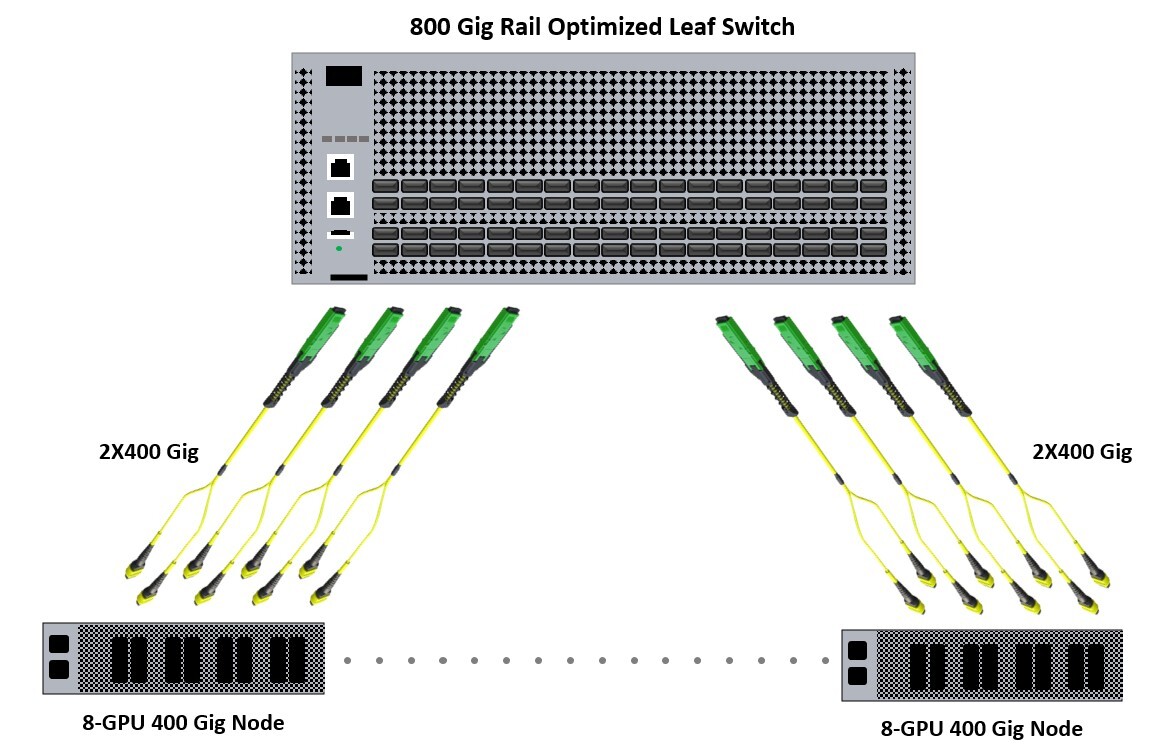

To achieve rapid and efficient training, all GPUs within a cluster interconnect through rail-optimized leaf switches at 400 or 800 Gigabit speeds. These connections often use InfiniBand, which relies on Remote Direct Memory Access (RDMA) to deliver lower latency than traditional Ethernet. RDMA over Converged Ethernet (RoCE) is also gaining traction as a way to deliver efficient, low-latency GPU communication over Ethernet networks. Beyond GPU connections, each node also requires Ethernet connections for storage and management (typically 100 Gig). Within the backend network, the leaf switches in each cluster interconnect via higher-level spine switches at 800 Gigabit or 1.6 Terabit Ethernet (2X800 Gig).
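
To make "rail-optimized" concrete, the Python sketch below models the cabling pattern: GPU k of every node connects to the leaf switch for rail k, so GPUs with the same index across nodes are a single switch hop apart. It is a minimal illustration that assumes 8-GPU nodes and one leaf per rail; the leaf names (`leaf-rail0` and so on) and both helper functions are hypothetical, and larger clusters may split each rail across several leaves.

```python
from collections import defaultdict

# Assumption: 8 GPUs per node (upper end of the 4-8 range) and a single
# leaf switch per rail. Real fabrics may split rails across several leaves.
GPUS_PER_NODE = 8


def rail_leaf(gpu_index: int) -> str:
    """Return the hypothetical leaf name for GPU slot `gpu_index` in any node."""
    if not 0 <= gpu_index < GPUS_PER_NODE:
        raise ValueError(f"gpu_index must be in [0, {GPUS_PER_NODE})")
    # Rail-optimized: slot k in every node lands on the same leaf (rail k).
    return f"leaf-rail{gpu_index}"


def build_rails(num_nodes: int) -> dict:
    """Map each leaf switch name to the (node, gpu_index) pairs cabled to it."""
    rails = defaultdict(list)
    for node in range(num_nodes):
        for gpu in range(GPUS_PER_NODE):
            rails[rail_leaf(gpu)].append((node, gpu))
    return dict(rails)


rails = build_rails(num_nodes=4)
print(len(rails))           # 8 leaves, one per rail
print(rails["leaf-rail0"])  # [(0, 0), (1, 0), (2, 0), (3, 0)]
```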

The inference phase of GenAI is where a trained model is put into action, applying what it learned to answer user queries or draw conclusions from new information. Inference is far less compute-intensive than training and typically happens in more traditional frontend data center networks, which connect systems to the outside world and handle general workloads like web hosting. To send inference requests to the AI backend network and receive results from it, frontend networks commonly use 200, 400, or 800 Gigabit Ethernet switch links. A robust and reliable frontend network is essential for delivering fast responses to AI inference requests from users and devices.

Connectivity Options for GPU Clusters

Interconnected GPUs in centralized clusters typically connect directly to leaf switches using point-to-point cabling, minimizing latency and signal loss for optimal GPU-to-GPU communication. These point-to-point connections can be achieved with various cabling options:

- Direct Attach Copper (DAC) Cables: Passive or active pre-terminated twinax copper cables with fixed pluggable transceivers on both ends.

- Active Optical Cables (AOCs): Pre-terminated fiber optic cables with integrated transceivers on both ends.

- Multimode or Singlemode MPO/MTP cables with separate transceivers: Traditional fiber optic cables with MPO/MTP connectors that require additional transceivers to function.

The primary factor in selecting the right solution for GPU connectivity is the distance between leaf switches and GPUs. Other considerations include power consumption, latency, and scalability.

In small, centralized clusters where leaf switches reside in the same cabinet as the GPUs, distances are 3 meters or less. DACs are ideal for these in-cabinet GPU connections: they are limited to 3 meters but offer the lowest power consumption and latency. However, DACs are application- and protocol-specific, which can hinder scalability.

In larger centralized clusters where leaf switches reside in a separate cabinet within a row of GPU cabinets, distances vary based on the size of the row. A small row of just a few cabinets might have distances of less than 10 meters between leaf switches and GPUs, while larger rows can approach 100 meters. AOCs and multimode MTP/MPO cables support these distances up to 100 meters. Like DACs, AOCs are application- and protocol-specific, but they offer lower latency and power consumption than multimode MTP/MPO cables with separate transceivers. MTP/MPO fiber cables, on the other hand, support all speeds and both InfiniBand and Ethernet protocols for easier scalability.

It's important to note that while AOCs and MTP/MPO cables can be used for point-to-point connections up to 100 meters, direct links become difficult to deploy and manage once distances exceed around 50 meters. For longer distances, structured cabling using MTP/MPO cables with interconnects and cross-connects simplifies deployment and offers better flexibility and manageability. Multimode MTP/MPO fiber cables support up to 100 meters, while singlemode MTP/MPO fiber cables support 2000 meters or more. Structured cabling is also ideal for decentralized AI networks, where GPUs are spread out due to power and space limitations, resulting in much longer connections to leaf switches.
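
The distance rules of thumb above can be condensed into a simple decision function. This is a hedged sketch rather than a design tool: the thresholds (3 meters, about 50 meters, 100 meters) come from the preceding paragraphs, while the function name, the `prefer_low_power` flag, and the returned labels are hypothetical. Actual reach depends on the specific transceiver, speed, and fiber type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CablingChoice:
    media: str
    structured: bool  # True = interconnects/cross-connects, False = point-to-point


def suggest_cabling(distance_m: float, prefer_low_power: bool = True) -> CablingChoice:
    """Hypothetical helper applying the article's distance rules of thumb."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= 3:
        # In-cabinet: DACs give the lowest power and latency.
        return CablingChoice("DAC", structured=False)
    if distance_m <= 50:
        # In-row point-to-point: AOCs save power; MTP/MPO eases scaling.
        media = "AOC" if prefer_low_power else "multimode MTP/MPO + transceivers"
        return CablingChoice(media, structured=False)
    if distance_m <= 100:
        # Beyond ~50 m, direct links get hard to manage; use structured cabling.
        return CablingChoice("multimode MTP/MPO + transceivers", structured=True)
    # Past multimode reach: singlemode structured cabling (2000 m or more).
    return CablingChoice("singlemode MTP/MPO + transceivers", structured=True)


for d in (2, 30, 80, 500):
    print(d, suggest_cabling(d))
```

Keeping the decision in one place makes it easy to adjust the cutoffs once the actual transceiver and fiber specifications are known.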

GPUs that connect at 400 Gig typically connect to an 800 Gig leaf switch port using 2X400G breakout cables to save space and maximize switch port utilization. DACs, AOCs, and MPO/MTP cables are all available in breakout versions to support this approach. In a structured cabling environment, 2X400G breakouts can also be achieved using cassettes.
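
The port savings from 2X400G breakouts are simple arithmetic, sketched below in Python. The function names are hypothetical, and the example assumes every GPU runs at 400 Gig and every leaf port at 800 Gig, as in the paragraph above.

```python
import math


def leaf_ports_needed(num_gpus: int, gpu_gbps: int = 400, port_gbps: int = 800) -> int:
    """Leaf ports required when each port is broken out to port_gbps // gpu_gbps GPUs."""
    if port_gbps % gpu_gbps:
        raise ValueError("port speed must be a whole multiple of the GPU link speed")
    gpus_per_port = port_gbps // gpu_gbps  # 2 for a 2X400G breakout
    return math.ceil(num_gpus / gpus_per_port)


def port_utilization(num_gpus: int, ports: int, gpu_gbps: int = 400, port_gbps: int = 800) -> float:
    """Fraction of provisioned leaf port bandwidth actually used by the GPUs."""
    return (num_gpus * gpu_gbps) / (ports * port_gbps)


gpus = 64
with_breakout = leaf_ports_needed(gpus)  # 32 ports
without_breakout = gpus                  # one 800G port per 400G GPU
print(with_breakout, port_utilization(gpus, with_breakout))        # 32 1.0
print(without_breakout, port_utilization(gpus, without_breakout))  # 64 0.5
```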

Connectivity between leaf switches and spine switches in backend AI networks is typically 800 Gigabit or 1.6 Terabit Ethernet (2X800 Gig). Unlike GPU connections that are often point-to-point, connections between leaf and spine switches almost always leverage singlemode or multimode structured cabling with interconnects or cross-connects for flexibility, manageability, and scalability.

Additional Backend Network Considerations

Selecting the right connectivity for backend AI networks depends on the overall configuration, the number of GPUs and clusters, and link distances, but there are some additional key considerations.

Because GPUs can consume as much as 10 times more power than traditional CPUs, it’s not out of the question to see rack power densities in a centralized AI cluster reaching 100 kW or more. This necessitates advanced cooling solutions, often involving liquid cooling. As previously noted, some larger enterprise data centers may need to adopt a decentralized approach using structured cabling due to power and space limitations.

GenAI networks tend to have much higher densities than traditional data center networks. Imagine a single node with 8 GPUs that each require a high-speed 400 or 800 Gig connection. A cluster with just 200 nodes would require 1600 high-speed connections. In hyperscale and AI-focused neocloud data centers, AI clusters could easily have thousands of nodes. Multiple clusters are also common in these environments, and switch-to-switch links in the backend can be in the tens of thousands because each leaf switch connects to spine switches in a full-mesh configuration at 800 Gig or 1.6 Terabit (2X800 Gig).
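
The connection counts above follow from two multiplications: GPU links scale with nodes times GPUs per node, and a full-mesh leaf-spine fabric needs one or more links for every leaf-spine pair. The Python sketch below reproduces the 1,600-link example; the fabric sizes in the second half (4 clusters, 64 leaves, 32 spines, 2 links per pair) are hypothetical values chosen only to show how quickly the totals grow.

```python
def gpu_links(nodes: int, gpus_per_node: int = 8) -> int:
    """One high-speed backend link per GPU."""
    return nodes * gpus_per_node


def leaf_spine_links(leaves: int, spines: int, links_per_pair: int = 1) -> int:
    """Full mesh: every leaf connects to every spine, links_per_pair times."""
    return leaves * spines * links_per_pair


print(gpu_links(200))  # 1600, matching the example above

# Hypothetical fabric, purely for illustration: 4 clusters, each with
# 64 leaves, 32 spines, and 2 links per leaf-spine pair.
per_cluster = leaf_spine_links(leaves=64, spines=32, links_per_pair=2)
print(per_cluster, 4 * per_cluster)  # 4096 16384
```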

To support ultra-high-density connectivity, very small form factor (VSFF) 16-fiber MMC connectivity is a game changer. It offers three times the density of traditional MTP/MPO connectivity, significantly saving space in AI networks. A single MMC-16 connector supports an 800 Gig uplink, and two MMC-16 connectors can be stacked in QSFP-DD800 and dual-row OSFP-XD form factor transceivers for 1.6 Terabit (2X800 Gig) switch links.
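
A rough Python estimate of connector, fiber, and panel counts helps show where the density gain pays off. This sketch assumes one MMC-16 per 800 Gig link, two per 1.6 Terabit link, a one-for-one replacement of 16-fiber MTP/MPO connectors, and the threefold density figure above; the panel capacity of 48 MTP/MPO ports is a hypothetical value, not a product specification.

```python
import math

FIBERS_PER_MMC16 = 16  # the "16-fiber" in MMC-16


def mmc16_connectors(links_800g: int = 0, links_1600g: int = 0) -> int:
    """One MMC-16 per 800G link; two stacked MMC-16s per 1.6T (2X800G) link."""
    return links_800g + 2 * links_1600g


def panels_needed(connectors: int, mpo_ports_per_panel: int, density_gain: int = 3) -> tuple:
    """(MTP/MPO panels, MMC panels), assuming MMC fits density_gain times more ports per panel."""
    mpo_panels = math.ceil(connectors / mpo_ports_per_panel)
    mmc_panels = math.ceil(connectors / (mpo_ports_per_panel * density_gain))
    return mpo_panels, mmc_panels


connectors = mmc16_connectors(links_1600g=100)
print(connectors, connectors * FIBERS_PER_MMC16)          # 200 3200
print(panels_needed(connectors, mpo_ports_per_panel=48))  # (5, 2)
```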

Beyond density, accurately measuring bit error rates in optical modules is paramount for ensuring reliable performance in high-performance computing environments. Data centers deploying backend AI networks need robust solutions capable of testing and verifying transceiver modules up to 800 Gig. Tools like the BERT 800G OSFP, QSFP-DD, and QSFP28 Bit Error Rate portable tester provide a flexible platform for testing 100 to 800 Gig transceivers commonly used in AI networks.
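
Bit error rate is simply errored bits divided by bits transmitted, but verifying a very low BER takes time. A common statistical approach (not necessarily the method any particular tester uses) models errors as a Poisson process: if zero errors are seen in N bits, BER is below a target with confidence CL when N ≥ -ln(1 - CL) / BER. The Python sketch below applies that formula; the function names and the 1e-12 target are hypothetical, and the test time uses the nominal 800 Gig data rate, ignoring FEC and encoding overhead.

```python
import math


def measured_ber(bit_errors: int, bits_tested: int) -> float:
    """Observed bit error ratio: errored bits divided by bits sent."""
    if bits_tested <= 0:
        raise ValueError("bits_tested must be positive")
    return bit_errors / bits_tested


def bits_for_confidence(target_ber: float, confidence: float = 0.95) -> float:
    """Error-free bits needed to claim BER < target_ber at the given confidence.

    Poisson model: P(0 errors in N bits) = exp(-N * BER), so we need
    exp(-N * target_ber) <= 1 - confidence, i.e. N >= -ln(1 - confidence) / target_ber.
    """
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    return -math.log(1 - confidence) / target_ber


def required_test_seconds(target_ber: float, line_rate_bps: float, confidence: float = 0.95) -> float:
    """Error-free test duration at a nominal line rate (ignores FEC/encoding overhead)."""
    return bits_for_confidence(target_ber, confidence) / line_rate_bps


print(f"{bits_for_confidence(1e-12):.3e}")               # 2.996e+12
print(f"{required_test_seconds(1e-12, 800e9):.2f}")      # 3.74
```

Because the required bit count scales with 1/BER, each tenfold tighter target multiplies the test time by ten.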

While every data center is unique and there’s no one-size-fits-all solution for AI clusters, the good news is that Cables Plus offers a complete line of data center solutions that include DACs, AOCs, and singlemode and multimode fiber to support any distance or topology in your AI networks. We also offer MMC ultra-high-density connectivity and 800 Gig testing solutions to save space and ensure peak performance. Contact us today for all your AI connectivity needs.
