Evolution of High-Speed Fiber Optics: 800G, 1.6T & 3.2T

- Oct 9th 2025

Large hyperscale and cloud data centers are rapidly migrating fiber optic links to 800 Gigabits (G) and 1.6 Terabits (T) speeds. This move is necessary to meet the increasing demand from AI workloads and other high-performance computing applications.

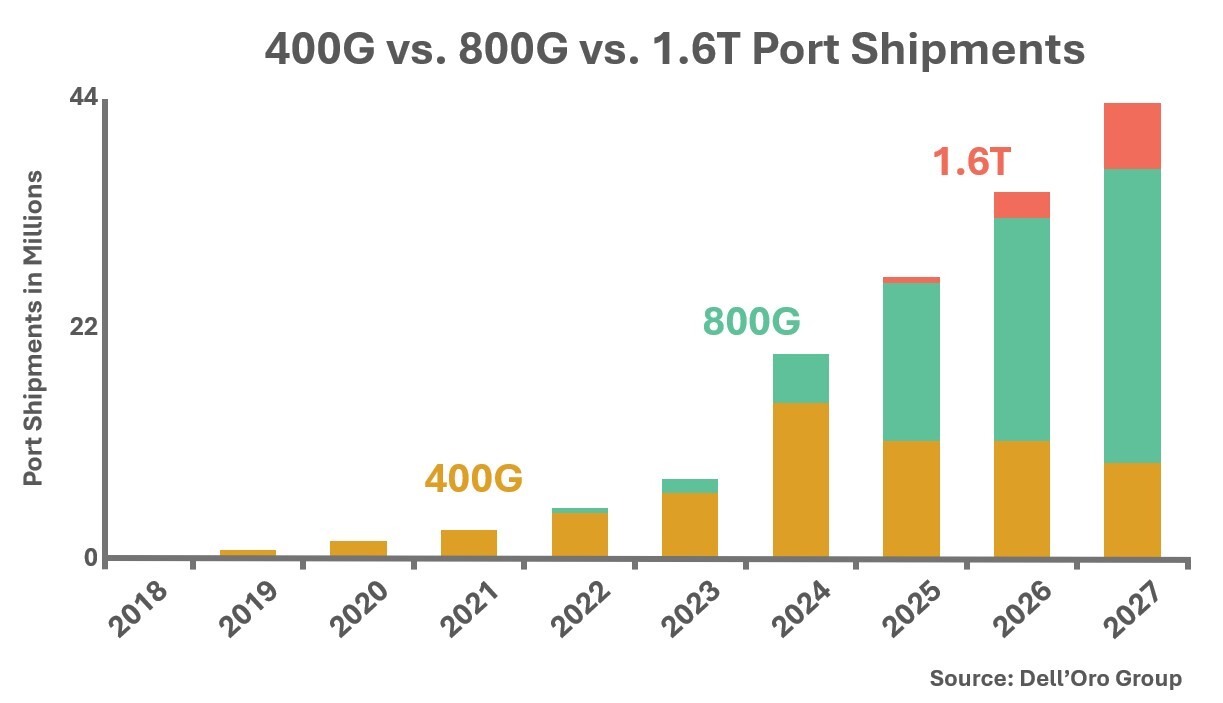

According to port shipment forecasts published by Dell'Oro Group, 800G is currently the fastest-growing segment of the market. Meanwhile, 1.6T is expected to ramp up considerably in 2026 and 2027. Now, major players like Amazon, Google, and Meta are gearing up for 3.2T speeds. Let’s examine the technology behind these speeds and what it means for the future of the data center.

Advanced Signaling Unlocks 800G

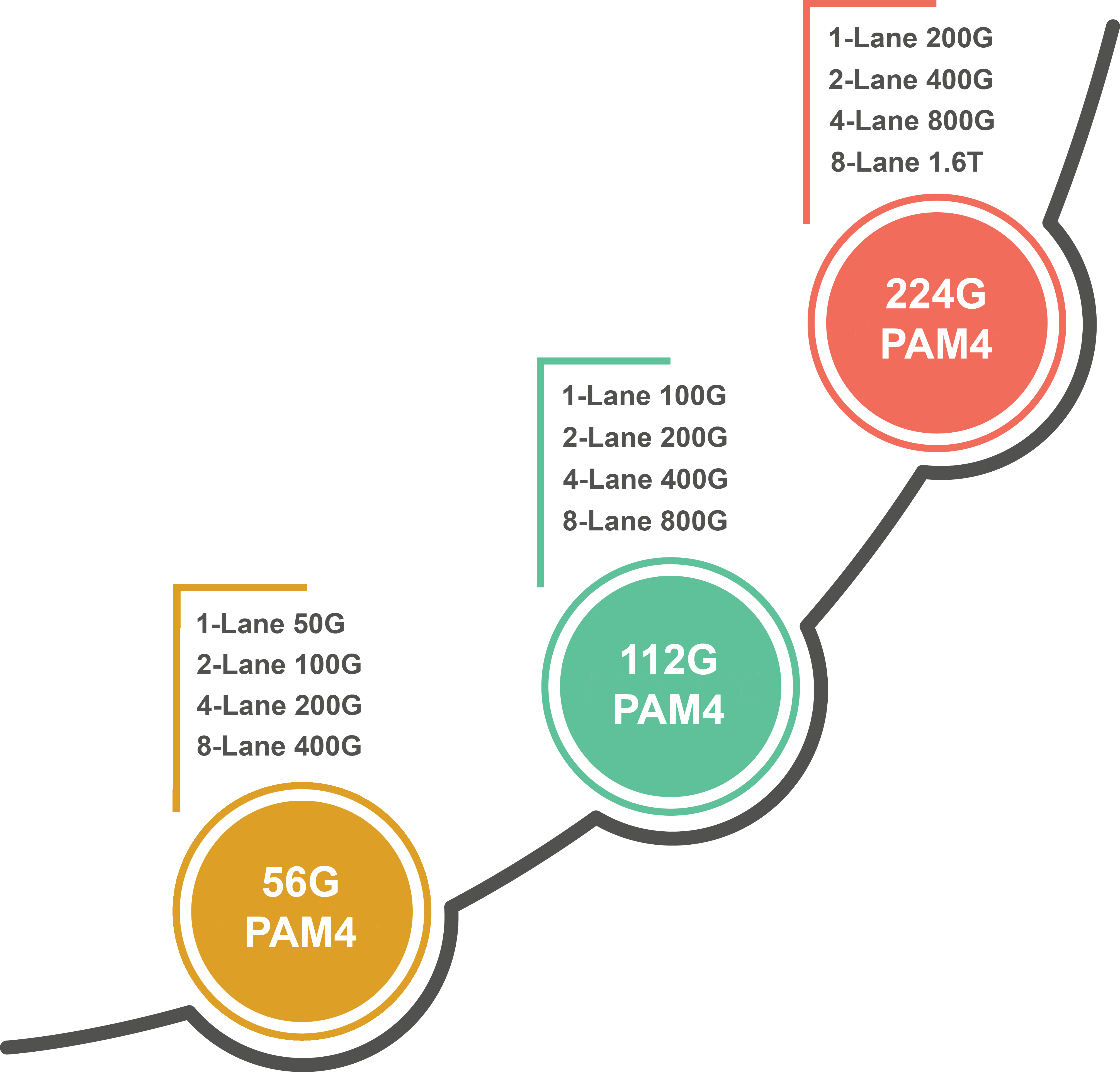

Reaching 800G depended primarily on advances in four-level pulse amplitude modulation (PAM4) signaling technology.

Initial PAM4 technology doubled the data rate of previous non-return-to-zero (NRZ) signaling by using four voltage levels instead of two, so each symbol carries two bits rather than one. This allowed PAM4 signaling to achieve 56G per lane compared to 28G for NRZ at the same baud rate. While more voltage levels enabled PAM4 to achieve a higher lane rate, they also required more complex transceivers that consume more power. Since the voltage levels are closer together, PAM4 is also more susceptible to noise and requires forward error correction (FEC) to operate reliably.
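The relationship between voltage levels and lane rate can be illustrated with a minimal sketch. The function names are hypothetical, and the 28 GBd symbol rate is a nominal figure chosen to match the 28G and 56G lane rates quoted above, not an exact line rate.

```python
def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol with the given number of amplitude levels."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two, at least 2")
    return levels.bit_length() - 1  # log2 for powers of two


def lane_rate_gbps(baud_gbd: float, levels: int) -> float:
    """Raw lane rate = symbol rate x bits per symbol."""
    return baud_gbd * bits_per_symbol(levels)


NOMINAL_BAUD_GBD = 28  # assumption: nominal symbol rate for illustration

print("NRZ  (2 levels):", lane_rate_gbps(NOMINAL_BAUD_GBD, 2), "Gb/s")
print("PAM4 (4 levels):", lane_rate_gbps(NOMINAL_BAUD_GBD, 4), "Gb/s")
```

At the same symbol rate, moving from two levels to four doubles the bits per symbol, which is where the doubling from 28G to 56G per lane comes from.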

The introduction of a 56G lane rate using PAM4 technology marked a significant milestone, enabling early 200G implementations over four lanes. This included 200GBASE-SR4 over 100 meters of OM4 multimode fiber using 8-fiber MPO/MTP connectivity, ratified in the IEEE 802.3cd standard in 2018. That same standard also ratified 50G Ethernet (50GBASE-SR) over a single lane and 100G Ethernet (100GBASE-SR2) over two lanes. Later, PAM4 paved the way for 400G implementations using eight lanes, such as 400GBASE-SR8 over 100 meters of OM4 multimode fiber using 16-fiber MPO/MTP connectivity, ratified in the IEEE 802.3cm standard in 2020.

Since the development of 56G per lane PAM4, digital signal processing (DSP) technology has advanced to correct noise, dispersion, and other distortions that occur during signal transmission. These developments enabled an increase to 112G per lane. This became the foundation for the following 400G applications over four lanes using 8-fiber connectivity and 800G applications over eight lanes using 16-fiber connectivity, commonly found in today’s data centers:

- 400GBASE-SR4 to 100 meters over OM4/OM5 multimode fiber using 8-fiber connectivity

- 400GBASE-VR4 to 50 meters over OM4/OM5 multimode fiber using 8-fiber connectivity

- 400GBASE-DR4 to 500 meters over singlemode fiber using 8-fiber connectivity

- 400GBASE-DR4-2 to 2000 meters over singlemode fiber using 8-fiber connectivity

- 800GBASE-SR8 to 100 meters over OM4/OM5 multimode fiber using 16-fiber connectivity

- 800GBASE-VR8 to 50 meters over OM4/OM5 multimode fiber using 16-fiber connectivity

- 800GBASE-DR8 to 500 meters over singlemode fiber using 16-fiber connectivity

- 800GBASE-DR8-2 to 2000 meters over singlemode fiber using 16-fiber connectivity
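The lane and fiber counts in the applications above follow from simple arithmetic, sketched below. This is an illustration under two assumptions: the "56G" and "112G" lane labels are treated as carrying a nominal 50 Gb/s and 100 Gb/s of Ethernet payload, and each parallel-optic lane uses one transmit fiber and one receive fiber. The names `PAYLOAD_GBPS` and `link_plan` are hypothetical.

```python
# Assumption: nominal Ethernet payload per lane for each PAM4 lane class.
PAYLOAD_GBPS = {56: 50, 112: 100}


def link_plan(lanes: int, lane_class: int) -> tuple[int, int]:
    """Return (aggregate Gb/s, fiber count) for a parallel-optic link."""
    aggregate = lanes * PAYLOAD_GBPS[lane_class]
    fibers = 2 * lanes  # one transmit and one receive fiber per lane
    return aggregate, fibers


examples = [
    ("200GBASE-SR4", 4, 56),
    ("400GBASE-SR8", 8, 56),
    ("400GBASE-DR4", 4, 112),
    ("800GBASE-DR8", 8, 112),
]

for name, lanes, lane_class in examples:
    aggregate, fibers = link_plan(lanes, lane_class)
    print(f"{name}: {lanes} lanes x {lane_class}G class = {aggregate}G over {fibers} fibers")
```

Doubling the lane rate at a fixed lane count doubles the aggregate speed without changing the fiber count, which is why the same 8-fiber or 16-fiber connectivity can carry successive generations.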

The Leap to 1.6T and 3.2T Speeds

As history has shown, technology continues to advance—and it’s happening at an increasingly rapid pace. The industry has already successfully achieved 224G per lane PAM4 signaling via additional DSP techniques, even before 112G lane rates have become the market leader.

224G per lane PAM4 signaling reduces the number of lanes for 800G to four (8 fibers) and enables 1.6T speeds over eight lanes (16 fibers). Switch manufacturers are already leveraging this advancement to offer 1.6T transceivers.

The upcoming IEEE 802.3dj also leverages 224G per lane PAM4 and is expected to be released by mid-2026, with the following 800G and 1.6T applications:

- 800GBASE-DR4 to 500 meters over OS2 singlemode fiber using 8-fiber MPO/MTP connectors

- 800GBASE-DR4-2 to 2000 meters over OS2 singlemode fiber using 8-fiber MPO/MTP connectors

- 1.6TBASE-DR8 to 500 meters over OS2 singlemode fiber using 16-fiber MPO/MTP connectors

- 1.6TBASE-DR8-2 to 2000 meters over OS2 singlemode fiber using 16-fiber MPO/MTP connectors

Implementations of 1.6T speeds will primarily support data center interconnects and backbone switch-to-switch links in high-performance computing environments. They will also likely be used for GPU connections in AI clusters, leveraging 2X800G breakout applications, and will support 4X400G and 8X200G switch-to-server links.
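The breakout options mentioned above come from dividing the eight lanes of a 1.6T port evenly among several lower-speed links. The sketch below enumerates those divisions, assuming a nominal 200 Gb/s of payload per 224G-class lane. The function name `breakout_options` is hypothetical.

```python
def breakout_options(total_lanes: int, lane_payload_gbps: int) -> dict[int, tuple[int, int]]:
    """Map number of breakout legs -> (lanes per leg, Gb/s per leg)."""
    options = {}
    for legs in range(1, total_lanes + 1):
        if total_lanes % legs == 0:  # lanes must divide evenly among legs
            lanes_per_leg = total_lanes // legs
            options[legs] = (lanes_per_leg, lanes_per_leg * lane_payload_gbps)
    return options


# Assumption: a 1.6T port built from eight lanes of 200 Gb/s nominal payload.
for legs, (lanes_per_leg, rate) in breakout_options(8, 200).items():
    print(f"{legs} x {rate}G ({lanes_per_leg} lane(s) per leg)")
```

With eight lanes, the even divisions are one 1.6T link, two 800G links, four 400G links, or eight 200G links, matching the 2X800G, 4X400G, and 8X200G cases in the text.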

224G per lane signaling will support initial 3.2T deployments using two 1.6T channels. However, efforts within multi-source agreement (MSA) groups and industry forums, such as the Optical Internetworking Forum (OIF), are now targeting 448G PAM4 technology. This is no surprise since Amazon, Google, and Meta are all key members of these efforts that are pushing for higher speeds to support AI and cloud workloads. 448G PAM4 technology will enable 1.6T over four lanes (8 fibers) and 3.2T over eight lanes (16 fibers). This might happen sooner than you think—the technology was already demonstrated at global networking events in 2025.

How Will It Impact the Data Center?

8-fiber and 16-fiber connectivity to support 800G, 1.6T, and 3.2T speeds are already well established via MPO/MTP connectors and very small form factor (VSFF) MMC connectors. Data centers that deployed 8-fiber MPO/MTP connectivity for 100G (utilizing 28G per lane NRZ signaling) were able to transition to 200G (using 56G PAM4) and later to 400G (utilizing 112G per lane PAM4) without requiring significant infrastructure upgrades. Similarly, those that deployed 16-fiber MPO/MTP connectivity for initial 400G implementations (using 56G per lane PAM4) were able to transition to 800G (using 112G per lane PAM4).

While it seems that 224G per lane PAM4 should allow these same data centers to leverage existing 8-fiber connectivity to support 800G and 16-fiber connectivity to support 1.6T, that’s not necessarily the case. Industry standards groups are currently focused only on singlemode fiber for 224G per lane signaling. Although there is some speculation that multimode fiber will eventually support 224G per lane signaling, the distances are likely to be less than 100 meters, which could require reducing link lengths. Signal reflections will have an even greater impact, necessitating the use of APC multimode connectors.

Data centers with existing 8-fiber and 16-fiber singlemode connectivity will be able to transition to 800G and 1.6T using 224G per lane signaling without major infrastructure upgrades. However, at 224G per lane signaling and future 448G per lane signaling, transceivers will be highly complex and significantly increase energy consumption. Rack power densities could reach 100 kilowatts or higher in some deployments, requiring upgrades to facility power and rack power delivery. These power densities exceed the capacity of traditional air cooling, further underscoring the need for advanced liquid cooling strategies, such as direct-to-chip and immersion cooling. Future 448G per lane signaling could also drive the need for power-reducing designs, such as onboard optics (OBO) and co-packaged optics (CPO) that move the electro-optic conversion process closer to the switch chip.

The good news is that Cables Plus offers a full range of 8- and 16-fiber MPO/MTP and VSFF MMC singlemode connectivity to support 800G, 1.6T, and future 3.2T. Our team can work with you to determine the best fiber connectivity for reliably scaling your data center infrastructure into the future. Contact us today for all your fiber needs.
